JOCL texture generation


Hi,

I am looking for an example of how to set up a buffer/image to generate an RGBA texture with JOCL. On my hardware (Intel HD4600), CL-GL interop does not seem to work, so I am currently trying to read back the buffer/image and define the texture from it.

Buffer definition:

CLBuffer<ByteBuffer> texBuffer = clContext.createByteBuffer(bufferWidth*bufferHeight*4, CLBuffer.Mem.WRITE_ONLY);

Texture definition after read-back of texBuffer:

gl.glTexImage2D(GL3.GL_TEXTURE_2D, 0, GL3.GL_RGBA8, bufferWidth, bufferHeight, 0, GL3.GL_RGBA, GL3.GL_UNSIGNED_BYTE, texBuffer.getBuffer());

Kernel:

__kernel void function ( __global const float* height, __global const float* pvec, __global unsigned char* store, unsigned len, unsigned w, unsigned h )

Now what is the correct order of RGBA components in "unsigned char* store"? Or is it better to use "uint*" with "type=GL_UNSIGNED_INT" in JOGL?

Thanks a lot!
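On the byte-order question: with format GL_RGBA and type GL_UNSIGNED_BYTE, OpenGL reads four consecutive bytes per texel in the order R, G, B, A, so a "uchar*" kernel would write store[4*i+0] = R through store[4*i+3] = A. Note that GL_UNSIGNED_INT combined with GL_RGBA describes four 32-bit components per texel rather than one packed word; the packed types are GL_UNSIGNED_INT_8_8_8_8 and GL_UNSIGNED_INT_8_8_8_8_REV, and which one matches a "uint*" store depends on endianness. The following is only a minimal pure-Java sketch of the two layouts; the class and method names are hypothetical and not part of JOCL or JOGL.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

// Hypothetical helper for illustration; not part of JOCL or JOGL.
final class RgbaLayout {

    // Writes one texel as four consecutive bytes R, G, B, A,
    // the layout that GL_RGBA + GL_UNSIGNED_BYTE reads.
    static void putTexel(ByteBuffer buf, int index, int r, int g, int b, int a) {
        int base = index * 4;
        buf.put(base, (byte) r);
        buf.put(base + 1, (byte) g);
        buf.put(base + 2, (byte) b);
        buf.put(base + 3, (byte) a);
    }

    // Packs a texel into one 32-bit value with R in the least significant byte.
    // Stored little-endian, its bytes in memory are again R, G, B, A.
    static int packLittleEndian(int r, int g, int b, int a) {
        return (r & 0xff) | ((g & 0xff) << 8) | ((b & 0xff) << 16) | ((a & 0xff) << 24);
    }

    public static void main(String[] args) {
        ByteBuffer bytes = ByteBuffer.allocateDirect(4);
        putTexel(bytes, 0, 255, 0, 0, 255); // opaque red, byte by byte

        ByteBuffer packed = ByteBuffer.allocateDirect(4).order(ByteOrder.LITTLE_ENDIAN);
        packed.putInt(0, packLittleEndian(255, 0, 0, 255)); // opaque red, one packed word

        // Print both layouts side by side, one byte per line.
        for (int i = 0; i < 4; i++) {
            System.out.println(i + ": " + (bytes.get(i) & 0xff) + " / " + (packed.get(i) & 0xff));
        }
    }
}
```

Writing individual bytes avoids the endianness question entirely, which is probably the simpler choice for a read-back path.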

|

Administrator

|

Do you have a compilable, small, self-contained piece of code that we could use for a test? It doesn't look like we have a test of OpenGL textures written by OpenCL, so I'd be keen to add it to our suite if you've got something we can debug.

We do have a test of a shared GL_VERTEX_ARRAY at https://github.com/JogAmp/jocl/blob/master/test/com/jogamp/opencl/gl/CLGLTest.java which might be helpful to you, but it doesn't write the buffer from OpenCL, it just verifies that the contents match the OpenGL contents.

|

Thanks! I can upload my small test program for copying a CL image to a GL RGBA texture.

I got the host copy working now. The problem was not with the copy but with preparing the VBO for rendering the textured quad!

private ByteBuffer clone (float[] data)
{
    int len = data.length;
    ByteBuffer direct = ByteBuffer.allocateDirect(len*4);
    direct.order(ByteOrder.nativeOrder()); // very important!
    for (int i=0; i<len; ++i) {
        direct.putFloat(data[i]);
    }
    direct.rewind();
    return direct;
}

I left out the direct.order call on the ByteBuffer.
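As a side note on the helper above: the element-by-element loop can be replaced by a bulk copy through a FloatBuffer view, which inherits the byte order set on the ByteBuffer. The sketch below uses only java.nio, and the class name is hypothetical. GlueGen's com.jogamp.common.nio.Buffers class (used later in this thread) also appears to offer newDirectFloatBuffer(float[]) for the same purpose, though that is worth checking against the version in use.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

// Hypothetical helper for illustration; uses only java.nio.
final class DirectFloats {

    // Copies the array into a direct buffer in native byte order.
    static ByteBuffer toDirect(float[] data) {
        ByteBuffer direct = ByteBuffer.allocateDirect(data.length * Float.BYTES)
                                      .order(ByteOrder.nativeOrder());
        // Bulk copy through a view; the position of 'direct' itself stays at 0,
        // so no rewind is needed afterwards.
        direct.asFloatBuffer().put(data);
        return direct;
    }

    public static void main(String[] args) {
        ByteBuffer buf = toDirect(new float[] {0f, 0.5f, 1f});
        System.out.println(buf.remaining() + " bytes, order " + buf.order());
        System.out.println("second float: " + buf.getFloat(4));
    }
}
```

The order must be set before the view is created, because a view captures the byte order of its parent at creation time.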

I am not sure whether there is a utility function for this task. Still, I could not make GL texture object sharing work: clContext2.createFromGLTexture2d gives me an exception:

Exception in thread "AWT-EventQueue-0" com.jogamp.opencl.CLException: unknown cause: code 201779856 [error: unknown]
    at com.jogamp.opencl.CLException.checkForError(CLException.java:67)
    at com.jogamp.opencl.gl.CLGLTexture2d.createFromGLTexture2d(CLGLTexture2d.java:66)
    at com.jogamp.opencl.gl.CLGLContext.createFromGLTexture2d(CLGLContext.java:284)
    at com.jogamp.opencl.gl.CLGLContext.createFromGLTexture2d(CLGLContext.java:272)
    at DemoViewer.initCL(DemoViewer.java:334)
    at DemoViewer.init(DemoViewer.java:193)

|

|

Administrator

|

When you have a single, self-contained .java file you can upload, just put it here and I'll give it a try.

|

|

Thanks! Here it is!

GL_INTEROP=false: host copy works for me.

GL_INTEROP=true: CL-GL interop gives an exception using my Intel HD4600 device.

DemoViewer.java

|

Administrator

|

It looks like your CL kernel code isn't syntactically correct. For an "int2 ic" you can't say ic[0], you have to say ic.x or ic.s0. How could this program even run for you?

Here are the errors:

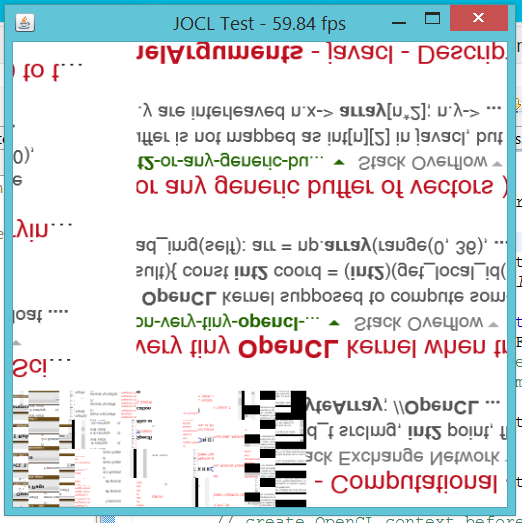

Exception in thread "AWT-EventQueue-0" com.jogamp.opencl.CLException$CLBuildProgramFailureException:
CLDevice [id: 457290976 name: GeForce GTX 660 type: GPU profile: FULL_PROFILE] build log:
:6:5: error: subscripted value is not an array, pointer, or vector
ic[0] = x;
^ ~
:8:5: error: subscripted value is not an array, pointer, or vector
color[0] = color[1] = color[2] = 0;
^ ~
:9:5: error: subscripted value is not an array, pointer, or vector
color[3] = 255;
^ ~
:12:8: error: subscripted value is not an array, pointer, or vector
ic[1] = y/aspect;
^ ~
:16:5: error: subscripted value is not an array, pointer, or vector
color[0] = 255;
^ ~
:17:5: error: subscripted value is not an array, pointer, or vector
ic[1] = x*aspect;
^ ~
[error: CL_BUILD_PROGRAM_FAILURE]
    at com.jogamp.opencl.CLException.newException(CLException.java:79)
    at com.jogamp.opencl.CLProgram.build(CLProgram.java:392)
    at com.jogamp.opencl.CLProgram.build(CLProgram.java:230)
    at com.jogamp.opencl.gl.CLGLTextureGenTest.initCL(CLGLTextureGenTest.java:337)
    at com.jogamp.opencl.gl.CLGLTextureGenTest.init(CLGLTextureGenTest.java:187)

If you correct those errors, you get this console output:

NVIDIA CUDA platform: GeForce GTX 660
0/1/2
1/32, 0/32
{CLDevice [id: 453555424 name: GeForce GTX 660 type: GPU profile: FULL_PROFILE]=BUILD_SUCCESS}
true
CLDevice [id: 453555424 name: GeForce GTX 660 type: GPU profile: FULL_PROFILE] build log:
<empty>
cl buffer type: GL_OBJECT_TEXTURE2D
shared with gl buffer: 1
cl initialised

And this graphical output:

The window contents are chopped up bits of a recent web search I did, so I assume it's showing uninitialized GPU memory contents. Is this what you expect to see?

|

Hmh, the array notation compiles on the Intel without complaint. But no sizzling.

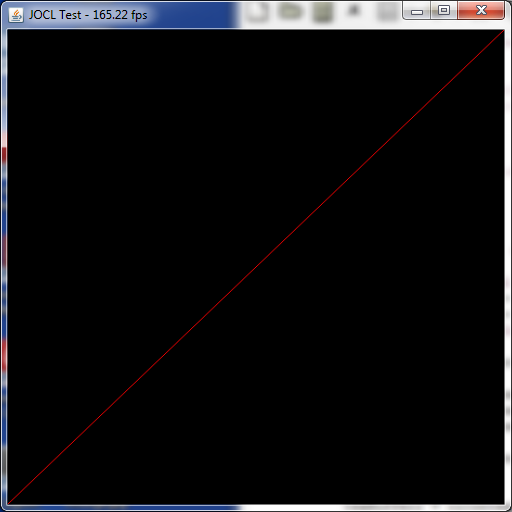

Here is what I expect, a diagonal red line, and what I get with GL_INTEROP==false:

Intel(R) OpenCL platform: Intel(R) HD Graphics 4600
No errors.
No errors.
No errors.
1102/1101/1103
1/32, 0/32
{CLDevice [id: 1667484832 name: Intel(R) HD Graphics 4600 type: GPU profile: FULL_PROFILE]=BUILD_SUCCESS}
true
CLDevice [id: 1667484832 name: Intel(R) HD Graphics 4600 type: GPU profile: FULL_PROFILE] build log:
fcl build 1 succeeded.
fcl build 2 succeeded.
bcl build succeeded.
texture-size=1048576
cl initialised

Could you try GL_INTEROP==false in comparison?

|

Administrator

|

Yes, GL_INTEROP = false works correctly for me. GL_INTEROP = true looks like we're just looking at uninitialized graphics memory, which implies the OpenCL code isn't writing to it. I'll look closer to see if there's anything obviously wrong. But I don't get any exception, it seems to run fine.

|

|

Thanks again.

Just to make sure, here is the source once again: DemoViewer.java

CL program:

__kernel void function (__write_only image2d_t store, unsigned w, unsigned h )
{
    unsigned x = get_global_id(0);
    float aspect = h/(float)w;
    int2 ic;
    ic.s0 = x;
    uint4 color;
    color.s012 = 0;
    color.s3 = 255;
    // clear image
    for (uint y=0; y<h; ++y) {
        ic.s1 = y;
        write_imageui(store, ic, color);
    }
    // set red pixel on diagonal (no anti-aliasing)
    color.s0 = 255;
    ic.s1 = x*aspect;
    write_imageui(store, ic, color);
}
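For comparing a read-back against the expected picture, a CPU reference of the kernel above can help. The following is a minimal sketch, assuming one work-item per column x and a row-major RGBA layout with 4 bytes per texel; the class and method names are hypothetical and not taken from DemoViewer.java.

```java
import java.nio.ByteBuffer;

// Hypothetical CPU reference for the kernel above; not part of DemoViewer.java.
final class DiagonalReference {

    // Returns w*h RGBA texels (row-major, 4 bytes each):
    // opaque black with one red pixel per column on the diagonal.
    static ByteBuffer render(int w, int h) {
        ByteBuffer img = ByteBuffer.allocateDirect(w * h * 4);
        float aspect = h / (float) w;
        for (int x = 0; x < w; x++) {              // one iteration per work-item
            for (int y = 0; y < h; y++) {
                put(img, w, x, y, 0, 0, 0, 255);   // clear column x
            }
            // float-to-int conversion truncates, as in the kernel
            put(img, w, x, (int) (x * aspect), 255, 0, 0, 255);
        }
        return img;
    }

    // Stores one texel at (x, y) using absolute indexing.
    static void put(ByteBuffer img, int w, int x, int y, int r, int g, int b, int a) {
        int base = (y * w + x) * 4;
        img.put(base, (byte) r);
        img.put(base + 1, (byte) g);
        img.put(base + 2, (byte) b);
        img.put(base + 3, (byte) a);
    }

    // Counts bytes that differ between the reference and a read-back buffer.
    static int mismatches(ByteBuffer expected, ByteBuffer actual) {
        int n = Math.min(expected.capacity(), actual.capacity());
        int count = Math.abs(expected.capacity() - actual.capacity());
        for (int i = 0; i < n; i++) {
            if (expected.get(i) != actual.get(i)) count++;
        }
        return count;
    }

    public static void main(String[] args) {
        ByteBuffer ref = render(8, 4);
        System.out.println("reference bytes: " + ref.capacity());
        System.out.println("self-comparison mismatches: " + mismatches(ref, render(8, 4)));
    }
}
```

A non-zero mismatch count against the interop path, with zero against the host-copy path, would narrow the problem down to the shared texture rather than the kernel logic.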

|

|

Administrator

|

I looked into this for a couple of hours today, and there's nothing obviously wrong. All the OpenCL calls are returning the right codes, everything seems to be in the right order, but modifying the CLGLTexture2d just doesn't modify the GL texture, which is left uninitialized. Tomorrow I'll dig into your exception a bit, maybe the Intel driver returns an error that the Nvidia driver doesn't catch, which could help make sense of this. The error code "201779856" doesn't appear in any OpenCL docs that I can find (not in hex either), so I'm not quite sure what to make of it yet.
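One quick sanity check on such a number: core OpenCL status codes are 0 (CL_SUCCESS) or small negative integers (for example CL_INVALID_VALUE is -30), so a large positive value may not be a genuine status code at all. The sketch below only classifies and prints the values from this thread; the class name is hypothetical, and the lower bound of -9999 is an assumption chosen to leave room for extension codes, not a limit from the specification.

```java
// Hypothetical helper for illustration; not part of JOCL.
final class ClStatusCheck {

    // Core OpenCL status codes are 0 or small negative integers.
    // The bound of -9999 is an assumption, not taken from the specification.
    static boolean looksLikeClStatus(int code) {
        return code <= 0 && code >= -9999;
    }

    // Formats a code in decimal and hex together with the classification.
    static String describe(int code) {
        return code + " (0x" + Integer.toHexString(code) + ") "
                + (looksLikeClStatus(code)
                        ? "is in the plausible status range"
                        : "is outside the plausible status range");
    }

    public static void main(String[] args) {
        System.out.println(describe(-30));        // CL_INVALID_VALUE
        System.out.println(describe(201779856));  // code from the Intel HD4600 stack trace
        System.out.println(describe(207432328));  // code reported later in the thread on NVIDIA
    }
}
```

What actually produces the out-of-range value is not established in this thread, so the check only narrows down where to look.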

|

|

Administrator

|

Tried on Mac OS X 10.9.2, got a black window for both Nvidia OpenCL and the Intel CPU OpenCL, both with GL-CL interop on and off. It does show a warning about a pre-existing GL error code 0x502 (GL_INVALID_OPERATION), so I'm going to try checking errors on all GL calls to see if something is slipping through the cracks.

|

|

Hi Wade,

thanks for your help! The program contains

drawable.setGL(new DebugGL3(drawable.getGL().getGL3()));

so GL errors should be reported immediately after they occur, shouldn't they?

I updated my NVidia driver to version 335.23 of 3/10/2014. The NVidia device now behaves like the Intel device: I still get the exception with GL_INTEROP==true:

NVIDIA CUDA platform: GeForce GTX 765M
0/1/2
1/32, 0/32
{CLDevice [id: 198122744 name: GeForce GTX 765M type: GPU profile: FULL_PROFILE]=BUILD_SUCCESS}
true
CLDevice [id: 198122744 name: GeForce GTX 765M type: GPU profile: FULL_PROFILE] build log:
<empty>
Exception in thread "AWT-EventQueue-0" com.jogamp.opencl.CLException: unknown cause: code 207432328 [error: unknown]
    at com.jogamp.opencl.CLException.checkForError(CLException.java:67)
    at com.jogamp.opencl.gl.CLGLTexture2d.createFromGLTexture2d(CLGLTexture2d.java:66)
    at com.jogamp.opencl.gl.CLGLContext.createFromGLTexture2d(CLGLContext.java:284)
    at com.jogamp.opencl.gl.CLGLContext.createFromGLTexture2d(CLGLContext.java:272)
    at DemoViewer.initCL(DemoViewer.java:351)

I found an old thread from 2011 about "createFromGLTexture2d", which reports the same exception: http://forum.jogamp.org/cl-createFromGLTexture2d-is-not-working-td2694449.html

|

Administrator

|

I still don't get the exception, but it just silently doesn't work. I upgraded my drivers to the latest also, and still no luck. I also tried writing a different test that just checks to see if OpenCL can read a texture that OpenGL creates, and that seems to work, bizarrely:

int[] id = new int[1];
gl.glGenTextures(id.length, id, 0);
gl.glActiveTexture(GL2.GL_TEXTURE0);
gl.glBindTexture (GL2.GL_TEXTURE_2D, id[0]);

ByteBuffer bufferGL = Buffers.newDirectByteBuffer(new byte [] {
    (byte)0, (byte)5, (byte)10, (byte)0xff,
    (byte)15, (byte)20, (byte)25, (byte)0xff,
    (byte)30, (byte)35, (byte)40, (byte)0xff,
    (byte)45, (byte)50, (byte)55, (byte)0xff});
bufferGL.rewind();

gl.glTexImage2D(GL2.GL_TEXTURE_2D, 0, GL2.GL_RGBA, 2, 2, 0, GL2.GL_RGBA, GL2.GL_UNSIGNED_BYTE, bufferGL);
gl.glBindTexture(GL2.GL_TEXTURE_2D, 0);
gl.glFinish();

ByteBuffer bufferCL = Buffers.newDirectByteBuffer(2*2*4);
CLGLTexture2d<ByteBuffer> clTexture = context.createFromGLTexture2d(bufferCL, GL2.GL_TEXTURE_2D, id[0], 0, CLBuffer.Mem.READ_ONLY);

// assertEquals(bufferGL.capacity(), clTexture.getCLCapacity());
// assertEquals(bufferGL.capacity(), clTexture.getCLSize());

CLCommandQueue queue = device.createCommandQueue();
queue.putAcquireGLObject(clTexture)
     .putReadImage(clTexture, true)
     .putReleaseGLObject(clTexture);

while(bufferCL.hasRemaining()) {
    byte bGL = bufferGL.get();
    byte bCL = bufferCL.get();
    assertEquals(bGL, bCL);
}

The test isn't perfect, though -- notice the commented asserts will fail, because the OpenCL call to determine the size of the CL image returns 0 for some reason. I tried a bunch of other stuff too, like making sure the GL texture was "complete", but haven't hit on the right thing yet. I'll keep trying more stuff to try to figure this out. It may be time to write a C test to make sure that this even works on Nvidia cards at all.

|

Administrator

|

Still working on this, just haven't had a chance to get to it the last couple of days. Next I'm going to try querying the OpenCL texture object to see if its properties are correct, then I'll try writing the same program in C to see if that behaves any different.

|

|

Administrator

|

Retried this on OS X last night, and figured out how to make it work properly. I had to change the texture2D() call in the fragment shader to texture(), since texture2D has been deprecated (this gets rid of the GL error 0x502 I had been seeing). Now I get the correct output on Mac for both the Nvidia GeForce 320M OpenCL device and the Intel OpenCL device. I'll try this on a PC tonight after work and see if that makes any difference there.

|

|

Hi Wade,

great! You are right, GLSL version 150 requires "texture" instead of "texture2D". But the problem initially was, and still is, "createFromGLTexture2D". The OpenCL 1.0 specification says (http://www.khronos.org/registry/cl/sdk/1.0/docs/man/xhtml/clCreateFromGLTexture2D.html):

"texture : .. Only GL texture objects with an internal format that maps to appropriate image channel order and data type specified in the table of supported Image Channel Order Values and the table of supported Image Channel Data Types at cl_image_format may be used to create a 2D image object."

Perhaps we should try a different format than GL_RGBA, GL_UNSIGNED_BYTE with GL! It is strange that the Intel and NVidia platforms both show the same kind of unknown error code!
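To make the format question concrete, here is a small lookup sketch. The mapping is recalled from the GL-internal-format table in the cl_khr_gl_sharing part of the OpenCL extension specification and should be checked against the spec before relying on it; the class and method names are hypothetical, and the code works on names only without calling OpenGL or OpenCL. If the table is right, a GL_RGBA8 texture maps to a normalized format, which pairs with write_imagef and float values in [0, 1], while write_imageui pairs with unsigned-integer formats such as GL_RGBA8UI. That might be worth testing with the kernel in this thread, but it is not a confirmed fix.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical lookup table built from names only; it does not call OpenGL or OpenCL.
final class GlToClFormat {

    // GL internal format -> CL channel data type (channel order CL_RGBA),
    // recalled from the cl_khr_gl_sharing table; verify against the specification.
    static final Map<String, String> CHANNEL_TYPE = new LinkedHashMap<>();
    static {
        CHANNEL_TYPE.put("GL_RGBA8", "CL_UNORM_INT8");
        CHANNEL_TYPE.put("GL_RGBA16", "CL_UNORM_INT16");
        CHANNEL_TYPE.put("GL_RGBA8UI", "CL_UNSIGNED_INT8");
        CHANNEL_TYPE.put("GL_RGBA8I", "CL_SIGNED_INT8");
        CHANNEL_TYPE.put("GL_RGBA16F", "CL_HALF_FLOAT");
        CHANNEL_TYPE.put("GL_RGBA32F", "CL_FLOAT");
    }

    // Returns the kernel write built-in that matches the channel data type,
    // or "unknown" for formats that are not in the table above.
    static String writeFunction(String glInternalFormat) {
        String type = CHANNEL_TYPE.get(glInternalFormat);
        if (type == null) return "unknown";
        if (type.startsWith("CL_UNSIGNED_INT")) return "write_imageui";
        if (type.startsWith("CL_SIGNED_INT")) return "write_imagei";
        return "write_imagef"; // normalized and floating-point formats
    }

    public static void main(String[] args) {
        for (String format : CHANNEL_TYPE.keySet()) {
            System.out.println(format + " -> " + CHANNEL_TYPE.get(format)
                    + " -> " + writeFunction(format));
        }
    }
}
```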

|

Administrator

|

I have tried a few combinations here, but GL_RGBA, GL_UNSIGNED_BYTE is one of the ones that people seemed to recommend :( On my Mac, Intel and Nvidia both work! And on my PC, neither one throws the error that you're showing.

Have you checked to see that your device even supports texture sharing? Either check device.isGLMemorySharingSupported() or do this:

platform = CLPlatform.getDefault(glSharing(drawable.getContext()));
device = platform.getMaxFlopsDevice(CLDeviceFilters.glSharing());

If your device doesn't support it, that would explain the error :) I'll try with the Intel drivers on my PC and see what result that gives. But on the good side, we know the code works on at least two devices, so there can't be anything too wrong with it.

|

Administrator

|

OK, now the story gets more interesting :) I installed the Intel OpenCL driver on my PC, set the demo to pick the Intel OpenCL device, and ran it. It crashes the JVM when the OpenCL kernel is invoked. But that actually makes sense -- the GL context is from my Nvidia card, and the CL context is from my CPU, and the two don't physically share any memory chips, so I'm handing the Intel OpenCL driver an unreachable pointer to GPU memory, which causes a crash on write.

So wait, then how did the Intel OpenCL driver work on my Mac? It's got Nvidia graphics too, not Intel integrated graphics. Aha, but the Nvidia GeForce 320M on the MacBook Air is a low-end card that doesn't have dedicated video memory, it shares memory with the CPU. So Intel CL-GL sharing works fine, because it's all the same memory.

And it turns out Nvidia GL-CL sharing wasn't working on the Mac after all, it's just that the Mac will give you a pointer to the same video memory across multiple runs. Since I wasn't initializing the texture to anything, the Nvidia runs would get the texture from when I ran on the CPU, which was still correct-looking. Initializing the texture shows that the GPU isn't successfully writing it from OpenCL.

So, next steps are still to try this in C++, and to try this on an AMD card (I've got one lying around, it's just a pain to install in my machine since I have to manually remove the drivers afterwards).

|

Administrator

|

Is there a programmatic way of forcing the use of a particular GPU? I thought NV GPU affinity API would help.

Julien Gouesse | Personal blog | Website

|

|

Administrator

|

In reply to this post by Wade Walker

I'm halfway done writing a Qt-based C++ OpenGL/OpenCL texture interoperability test to see if this feature works at all. I've got OpenCL interop contexts creating, I just need to put a texture on my object and try writing to it from OpenCL. If it works, then I'll have a reference to debug the JOCL against, and if not, then we'll know that it just doesn't work on Nvidia systems yet.

|
