[SOLVED] mouse input

|


Hi,

I am currently implementing camera control via the mouse in the usual first-person way. My current solution works, but the camera rotation is not smooth :( I guess it's because I use AWT functions to read the mouse position. I've read some articles, and some of them use GLUT to handle the mouse motion: glutPassiveMotionFunc and glutMotionFunc. Unfortunately I can't find these functions in com.jogamp.opengl.util.gl2.GLUT.

Here is the way I currently do it:

AWT MouseMotionListener
* read mouse position
* add x and y distance from center to internal dx, dy
* recenter mouse via Robot (if moved)

Input Thread (10ms sleep, high priority)
* read and reset dx and dy
* apply dx and dy to camera rotation

It runs a bit smoother if I change the MouseMotionListener a bit:
* read mouse position
* add x and y distance from last mouse position to internal dx, dy
* if mouse position is outside a defined region, recenter it in that region via Robot

If I imagine this movement in a first-person shooter, it would be unplayable :( I've read about the input handling abilities of LWJGL (maybe it will work better), but I don't want to switch just because of input issues, because I really like the design of JOGL. It would be nice if someone could help me solve this problem.
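The hand-off between the listener and the input thread described above can be sketched as follows. This is only a minimal illustration, not the poster's actual code: the class name `MouseDeltaAccumulator` and its methods are hypothetical, and the wiring to `MouseMotionListener` and `Robot` is left out so the sketch stays independent of a display.

```java
import java.util.concurrent.atomic.AtomicInteger;

/** Hypothetical helper: collects relative mouse motion on one thread, hands it to another. */
final class MouseDeltaAccumulator {
    private final AtomicInteger dx = new AtomicInteger();
    private final AtomicInteger dy = new AtomicInteger();

    /** Listener side: add the offset of (x, y) from a reference point (center or last position). */
    void add(int x, int y, int refX, int refY) {
        dx.addAndGet(x - refX);
        dy.addAndGet(y - refY);
    }

    /**
     * Consumer side: return {dx, dy} and reset both to zero.
     * The two counters are reset one after the other, so a motion event that
     * arrives in between may be split across two drains; nothing is lost.
     */
    int[] drain() {
        return new int[] { dx.getAndSet(0), dy.getAndSet(0) };
    }
}
```

In an AWT listener, `add` would be called from `mouseMoved`/`mouseDragged` with the event coordinates, and `drain` from whichever thread updates the camera.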

|

Administrator

|

One possible problem is the recentering of the mouse via Robot -- doing that in the MouseMotionListener could generate a cascade of mouse move events (since the event handler is generating more of the same type of event). Try turning off recentering to see what effect it has on your smoothness.

Another possibility: maybe the non-smooth motion is due to the fact that a mouse position change has to go through two threads (maybe three) before it has an effect on the rendered scene:
- (AWT GUI thread): save dx, dy
- (input thread): apply dx, dy to camera matrix
- (rendering thread?): draw scene

The time between mouse move and screen update could vary unpredictably between 1 and 3 times the maximum thread period. And this is the best case -- if the AWT event queue gets too full, clearing out the backlog may introduce further variation in update times.

I'd try removing at least the input thread -- let the MouseMotionListener change the camera matrix directly. It may take some tweaking to get a multithreaded app to render smoothly. Without knowing exactly how you've designed it, it's hard to say for certain.
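Applying the mouse delta to the camera directly, without an intermediate thread, might look like the sketch below. The class `FirstPersonCamera`, the degrees-per-pixel sensitivity, and the 89 degree pitch limit are all assumptions made for illustration; none of them come from the thread.

```java
/** Hypothetical first-person camera holding only its orientation. */
final class FirstPersonCamera {
    private float yawDeg;   // rotation around the vertical axis
    private float pitchDeg; // looking up (positive) or down (negative)

    /** Apply a mouse movement of (dx, dy) pixels; screen y grows downwards. */
    void rotate(int dx, int dy, float degPerPixel) {
        yawDeg = (yawDeg + dx * degPerPixel) % 360f;
        // Clamp the pitch so the view cannot flip over the vertical axis.
        pitchDeg = Math.max(-89f, Math.min(89f, pitchDeg - dy * degPerPixel));
    }

    float yaw()   { return yawDeg; }
    float pitch() { return pitchDeg; }
}
```

A listener could call `rotate` from `mouseMoved`, and the rendering code would read `yaw()` and `pitch()` when it builds the view matrix. If the two run on different threads, access would still need to be synchronized.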

|

Hi..

Thanks for your answer. I had already tried it without the thread, but it didn't change anything. I found a solution which works great: I don't track mouse movement via AWT anymore. I found a library called JInput. It gives me direct access to raw mouse input (or any other controller).

My current solution works as follows:

Input Thread (10ms)
- collect and use mouse movement information
- recenter mouse via Robot

The AWT MouseMotionListener is not needed anymore. Here is the JInput forum where I found a starting guide: http://www.java-gaming.org/index.php?board=27.0

|

Administrator

|

Hi

You don't need JInput to do it. I use AWT with JOGL in my own first person shooter and it works. I do it in Ardor3D too. Even NEWT can do it fine (but be aware of bug 525). JInput does not work on some machines because of a lack of rights, so use it with care. Best regards.

Edit: I use AWT Robot too, and I don't need it anymore with NEWT, see GLWindow.warpPointer(int x, int y). Your solution is far too complicated, mine does not require a new thread...

Julien Gouesse | Personal blog | Website

|

|

You are right, JInput is a bit more complicated and I had to change permissions for /dev/input/event*, but since it only has to run for the moment, it's okay :)

But JInput has the advantage that I can use a gamepad to control the game. JInput is also independent of any manipulation (smoothing, acceleration) of the mouse movement that may be done by the GUI (I think so, because it uses direct input data). Maybe the AWT solution performs so badly because my main thread is really busy. I only run at < 20fps because I use glVertex commands a lot (I know how to tweak this, it's just testing code). Putting the input thread at the highest priority changed nothing. Do you know if the permission problems of JInput are also present on Win7?

|

Administrator

|

Hi

Given the title of this thread, I have to say that JInput does not solve your problem any better than NEWT. I know it allows the use of joypads and various controllers, but it has some annoying limitations. Your AWT solution might be slow; mine is used in a real first person shooter and it worked even before I switched to vertex arrays and VBOs in 2007. Michael Bien and "Riven" helped me to write this, feel free to reuse this piece of code even in non-GPL source code: http://tuer.svn.sourceforge.net/viewvc/tuer/alpha/main/GameMouseMotionController.java?revision=378&view=markup

This permission problem does not occur on Windows, except on some machines when the user has no administration rights. A better solution consists in using the AWT plug-in of JInput if you need to use the mouse and the keyboard. You should use JInput directly only for joypads, and in this case changing the permissions of some files is not required on a lot of Linux distros (as joypad data are not considered "sensitive", they are accessible to any user). If you don't really need joypads yet, even using AWT directly will cause you less trouble; NEWT will give slightly better results (but be aware of bug 525).

In the future, I will need to integrate JInput into NEWT. I will probably write a NEWT plug-in for JInput so that we can use JInput with NEWT; it will allow the keyboard, the mouse and the joypads to be used with NEWT, without AWT, with excellent performance and without having to modify the permissions of any files. I suggested a fix for bug 525; you can try to rebuild JOGL with this fix, it should work fine. Good luck.

Julien Gouesse | Personal blog | Website

|

|

Hi.. I reviewed your code and it handles input exactly like I did in my first step. Maybe I'll switch back to AWT and try it again. For now I'm really happy with JInput. Thanks for your hints!

|

|

Administrator

|

I forgot to mention something: I use PointerInfo to get the position of the mouse cursor, without any thread creation, caching, synchronization, etc.
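Polling the pointer with `PointerInfo` instead of listening for events could be sketched like this. The class `PointerPoller` and its methods are hypothetical names; only `MouseInfo.getPointerInfo()` and `PointerInfo.getLocation()` are standard AWT calls. This is not taken from the code linked earlier in the thread.

```java
import java.awt.MouseInfo;
import java.awt.Point;
import java.awt.PointerInfo;

/** Hypothetical helper that polls the pointer once per frame instead of using events. */
final class PointerPoller {
    /** Offset of the pointer from a reference point (e.g. the window center), in pixels. */
    static Point delta(Point pointer, Point reference) {
        return new Point(pointer.x - reference.x, pointer.y - reference.y);
    }

    /**
     * Current pointer position in screen coordinates, or null if no pointer is available.
     * Requires a display: AWT throws HeadlessException in a headless environment.
     */
    static Point currentPointer() {
        PointerInfo info = MouseInfo.getPointerInfo();
        return info == null ? null : info.getLocation();
    }
}
```

Called once per rendered frame, `delta(currentPointer(), center)` gives the movement since the last recentering, all on the calling thread.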

Julien Gouesse | Personal blog | Website

|

|

In reply to this post by gouessej

Hello,

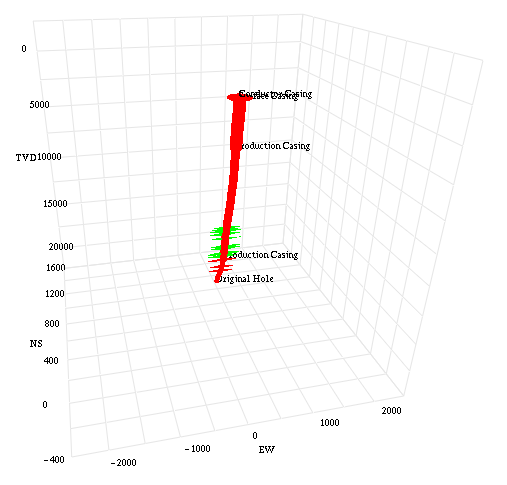

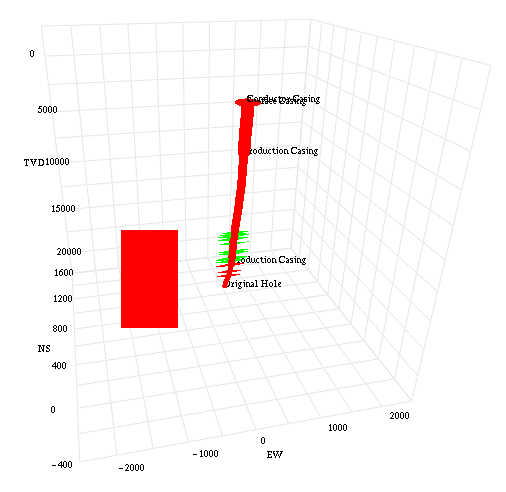

What I aim to do is to select a part of the 3D scene in the original picture with a rectangle, so that I can get the TVD information of that selected part. Now I can draw a rectangle according to the mouse click and drag, see the add-a-rectangle picture. But it isn't in the same plane as the selected part.

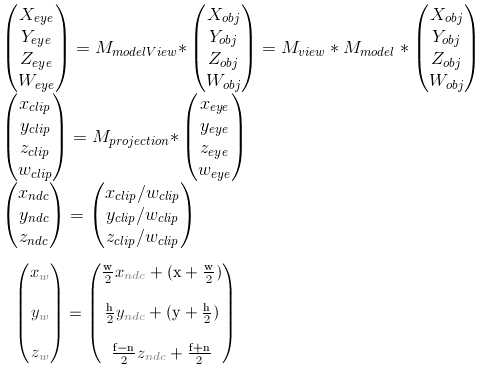

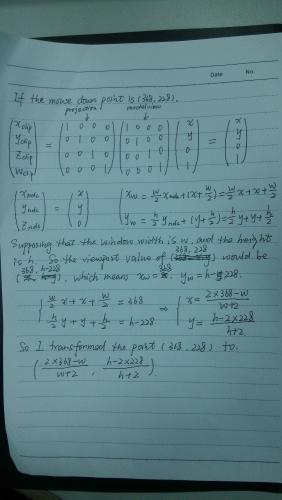

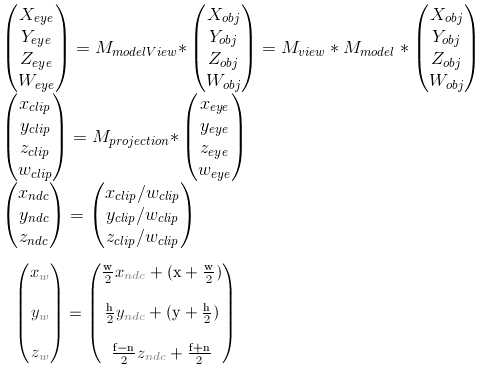

About the first email: I was using immediate mode to draw a rectangle. Maybe I didn't make myself understood. The following matrix transformation shows how to transform the model coordinate system to the screen coordinate system. But the points generated by mouse click and drag are in the screen coordinate system, not the model coordinate system. So if I use

    gl2.glBegin(GL2.GL_QUADS);
    gl2.glVertex2f(mouseDownX, mouseDownY);
    gl2.glVertex2f(mouseDownX, mouseMoveY);
    gl2.glVertex2f(mouseMoveX, mouseMoveY);
    gl2.glVertex2f(mouseMoveX, mouseDownY);
    gl2.glEnd();

that means treating the screen values as model values, which would be wrong. Later, I figured out a way: first I set the modelview matrix and projection matrix to the identity matrix, then please see the calculate picture. Is this the right approach?

|

|

OpenGL is about space transformations, this makes things *relatively* easier.

http://web.archive.org/web/20140106105946/http://www.arcsynthesis.org/gltut/Positioning/TransformPipeline.svg

When you draw the rectangle you are in window space, and what you want to do is retrieve the world space coordinates (I guess). For that you have to apply the inverse transformation; this is usually called unproject. There should be a lot of references on the net.

This looks right, you go from object (model) space to window space. For example, if the model matrix is the matrix that transforms from model to world space, its inverse, model^(-1), transforms from world to model space.
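To make the idea of unprojecting concrete, here is a minimal sketch that inverts only the viewport and projection steps, for a symmetric perspective projection of the kind gluPerspective builds. It assumes a viewport starting at (0, 0), a window y measured from the bottom, and an aspect ratio equal to width / height. The class and method names are hypothetical. The result is in eye space; getting to world or model space would additionally require the inverse of the modelview matrix. JOGL's GLU class also provides a gluUnProject method that handles the general case.

```java
/** Hypothetical helper: window space -> eye space for a gluPerspective-style projection. */
final class PerspectiveUnproject {
    /**
     * @param winZ depth in [0, 1], where 0 is the near plane and 1 the far plane
     * @return {x, y, z} in eye space (the camera looks down the negative z axis)
     */
    static double[] toEye(double winX, double winY, double winZ,
                          int viewportW, int viewportH,
                          double fovYDeg, double near, double far) {
        // Window coordinates -> normalized device coordinates in [-1, 1].
        double ndcX = 2.0 * winX / viewportW - 1.0;
        double ndcY = 2.0 * winY / viewportH - 1.0;
        double ndcZ = 2.0 * winZ - 1.0;
        // Invert the non-linear depth mapping of the perspective projection.
        double eyeZ = 2.0 * far * near / ((far - near) * ndcZ - (far + near));
        // Half extents of the view frustum at that depth.
        double halfH = Math.tan(Math.toRadians(fovYDeg) / 2.0) * -eyeZ;
        double halfW = halfH * viewportW / viewportH;
        return new double[] { ndcX * halfW, ndcY * halfH, eyeZ };
    }
}
```

For example, the center of an 800x600 window at depth 0 maps to the point on the near plane straight ahead of the camera.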

|

Did you see that red rectangle? It is not in the same plane as the red cylinder. I want to draw a rectangle as a selection of a part of the red cylinder. Do you have any ideas about this?

|

Yep, which projection type are you using?

When you unproject you have to pass the position in window space (x, y, z). x and y are easy, but what matters to you here is the depth value, that is z. z ranges from 0 (near plane) to 1 (far plane). This means that if you click on p0 (x0, y0) and you unproject passing (x0, y0, 0), you will get the position of your click on the near plane in object space.

I guess the easiest way for you is to set boundaries when you are axis aligned. This means when you look at the red cylinder and the walls of the grid are either parallel or perpendicular to you. Then you click on two points, one minimum p0 and one maximum p1. This defines a 3D volume between (x0, y0, z0) and (x1, y1, z1); you may want to do this in world space.

Now you can store these parameters in your program/shader, and when you render, if your world space position vec3 world(x, y, z) falls within this range, that is if, and only if:

world.x > x0 && world.x < x1 && world.y > y0 && world.y < y1 && world.z > z0 && world.z < z1

then you are in the selected area and you can render your fragment as you wish.
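On the CPU side, the range test above could be sketched as a small axis-aligned box class. The name `SelectionBox` is hypothetical, and sorting the two corners in the constructor is an added convenience so that the two clicked points can be passed in any order.

```java
/** Hypothetical axis-aligned selection volume defined by two opposite corners. */
final class SelectionBox {
    private final double x0, y0, z0, x1, y1, z1;

    /** The corners may be given in any order; they are sorted per axis. */
    SelectionBox(double ax, double ay, double az, double bx, double by, double bz) {
        x0 = Math.min(ax, bx); x1 = Math.max(ax, bx);
        y0 = Math.min(ay, by); y1 = Math.max(ay, by);
        z0 = Math.min(az, bz); z1 = Math.max(az, bz);
    }

    /** Same strict comparisons as the condition quoted above. */
    boolean contains(double x, double y, double z) {
        return x > x0 && x < x1
            && y > y0 && y < y1
            && z > z0 && z < z1;
    }
}
```

In a shader the same comparison would be made per fragment against the two corners passed in as uniforms.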

|

I am using glu.gluPerspective(60.0f, (float) width / (float) height, 1.0f, 100.0f);

"set boundaries when you are axis aligned. this means when you look at the red cylinder and the walls of the grid are either parallel or perpendicular to you"

What does that mean? I don't understand.

It means, for example, when you are looking in the negative z direction in world space. In the image, that is when your middle finger is pointing at you: http://organis.org/X/vilbrandt_files/div00.jpg

|

In reply to this post by elect

How do I set the z of the window coordinate?

|

|

Uhm, wait, what do you want to do exactly once you select part of the cylinder?

|

|

To get the TVD (y-axis) information of the selected part, so that my co-worker can show the inside details of the selected part by drawing a 2D graph.

|

|

If the cylinder is always more or less like that, you can calculate a sort of center of gravity p_c(x, y, z) and then use the z component of this point as depth

|

|

If the clicked point has been rendered, then I can get its z-depth with gl2.glReadPixels(sx, viewportMatrix[3] - sy, 1, 1, GL2.GL_DEPTH_COMPONENT, GL2.GL_FLOAT, FloatBuffer.wrap(winZ)). Otherwise I get 1.0, so if I use 1.0 to calculate the corresponding point in world space, the result might be wrong.

|

|

Calculate the center of gravity p_c(x, y, z) in model space and transform it into window space. When you select your rectangle, if p_min is your minimum point and p_max the maximum, take one point on the upper line, such as pUp(p_max.x - p_min.x, p_max.y), and one point on the lower line, such as pDown(p_max.x - p_min.x, p_min.y), and then unproject these two points using the p_c.z coordinate. It'd be like selecting your cylinder at the slice that corresponds to its p_c.
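The depth to use when unprojecting could be picked along these lines. This is only a sketch under several assumptions: the center of gravity is taken as the plain average of the vertices, the points are already in eye space (modelview applied), the projection is a gluPerspective-style one, and a depth of 1.0 read back from the depth buffer means nothing was rendered at that pixel. All names are hypothetical.

```java
/** Hypothetical helper for choosing a window-space depth from a center of gravity. */
final class CentroidDepth {
    /** Average of the given points, each an {x, y, z} triple. */
    static double[] centroid(double[][] points) {
        double[] sum = new double[3];
        for (double[] p : points) {
            sum[0] += p[0]; sum[1] += p[1]; sum[2] += p[2];
        }
        return new double[] { sum[0] / points.length, sum[1] / points.length, sum[2] / points.length };
    }

    /** Window-space depth in [0, 1] of an eye-space z (negative in front of the camera). */
    static double windowDepth(double eyeZ, double near, double far) {
        double ndcZ = (far + near) / (far - near) + 2.0 * far * near / ((far - near) * eyeZ);
        return 0.5 * ndcZ + 0.5;
    }

    /** Use the depth read from the depth buffer unless it is the cleared value 1.0. */
    static double chooseDepth(double readDepth, double fallbackDepth) {
        return readDepth < 1.0 ? readDepth : fallbackDepth;
    }
}
```

The chosen depth would then be passed as the window z when unprojecting pUp and pDown.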
