Seeking Help with OpenGL Depth Buffer Issue


Hello,

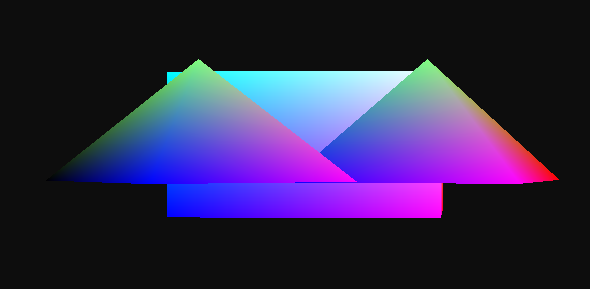

I'm currently having a problem where I seem to be doing something incorrectly, but I haven't been able to identify the cause. (It might be something silly I've overlooked...) Basically, I'm drawing several objects into a scene, but the objects show up in the order in which they're rendered (i.e. the last one rendered shows in front). See the picture above for an example. I'm also using the latest release of JOGL (Sept. 2014).

On the code side, I have depth testing enabled and I'm clearing the depth buffer before drawing each frame. Some of the code is below:

@Override

The DebugGL4 trace shows no failures or error reports. One difference between my code and other code samples is that, since I'm rendering different objects, I have a separate VBO for each. The code to render a single frame follows:

@Override

This is what the drawing method for the Shape3D objects looks like:

private void init(GL4 gl) {

The objects are at known positions:

They should both be covered by the cube, but that's clearly not happening. I'm still not sure what I may be doing wrong and, hopefully, someone can help me out with a fresh set of eyes. I can share more code if necessary, but these seemed to be the most relevant parts.

Printing the capability data, I get:

GLCapabilities caps = new GLCapabilities(GLProfile.getDefault(GLProfile.getDefaultDevice()));

The video card is an NVIDIA GTX 770i on driver 331.38, running Kubuntu 14.04 AMD64. Thanks in advance for the help.


At first glance the code you show looks OK. But we don't see the vertex definition or the shader code, so it's hard to say whether you actually write different Z values or whether they are all the same when gl_Position is set in the shader.


Hi jmaasing,

This is the vertex definition that gets sent to the VBO for one of the objects. The other object simply has values for a different shape, but still only x, y, z and no w, which is left to the shader as a default value.

    final float[] vertices = new float[] {
        // x, y, z (36 vertices: 6 faces x 2 triangles x 3 vertices)
        // back face (z = -0.25)
        -0.25f,  0.25f, -0.25f,
        -0.25f, -0.25f, -0.25f,
         0.25f, -0.25f, -0.25f,
         0.25f, -0.25f, -0.25f,
         0.25f,  0.25f, -0.25f,
        -0.25f,  0.25f, -0.25f,
        // right face (x = +0.25)
         0.25f, -0.25f, -0.25f,
         0.25f, -0.25f,  0.25f,
         0.25f,  0.25f, -0.25f,
         0.25f, -0.25f,  0.25f,
         0.25f,  0.25f,  0.25f,
         0.25f,  0.25f, -0.25f,
        // front face (z = +0.25)
         0.25f, -0.25f,  0.25f,
        -0.25f, -0.25f,  0.25f,
         0.25f,  0.25f,  0.25f,
        -0.25f, -0.25f,  0.25f,
        -0.25f,  0.25f,  0.25f,
         0.25f,  0.25f,  0.25f,
        // left face (x = -0.25)
        -0.25f, -0.25f,  0.25f,
        -0.25f, -0.25f, -0.25f,
        -0.25f,  0.25f,  0.25f,
        -0.25f, -0.25f, -0.25f,
        -0.25f,  0.25f, -0.25f,
        -0.25f,  0.25f,  0.25f,
        // bottom face (y = -0.25)
        -0.25f, -0.25f,  0.25f,
         0.25f, -0.25f,  0.25f,
         0.25f, -0.25f, -0.25f,
         0.25f, -0.25f, -0.25f,
        -0.25f, -0.25f, -0.25f,
        -0.25f, -0.25f,  0.25f,
        // top face (y = +0.25)
        -0.25f,  0.25f, -0.25f,
         0.25f,  0.25f, -0.25f,
         0.25f,  0.25f,  0.25f,
         0.25f,  0.25f,  0.25f,
        -0.25f,  0.25f,  0.25f,
        -0.25f,  0.25f, -0.25f
    };

The vertex shader code is below. Note that although the shader declares in vec4 position; while the buffer only supplies x, y and z, the documentation states that missing components are filled in with defaults, with w set to 1.0. This behaves the same as declaring in vec3 position; and then using vec4(position, 1.0) in the calculations instead. I had already tried both alternatives, just in case:

    #version 440 core

    layout (location = 0) in vec4 position;

    out VS_OUT
    {
        vec4 color;
    } vs;

    uniform mat4 model_matrix;
    uniform mat4 view_matrix;
    uniform mat4 proj_matrix; // holds perspective projection

    void main()
    {
        gl_Position = proj_matrix * view_matrix * model_matrix * position;
        vs.color = position * 2.0 + vec4(0.5, 0.5, 0.5, 0.0);
    }

The fragment shader code is the following:

    #version 440 core

    out vec4 color;

    in VS_OUT
    {
        vec4 color;
    } fs;

    void main()
    {
        color = fs.color;
    }

Perhaps you'll see something I've been missing for a while. Thanks again for the help.

PS: How do you reply to a thread via email? I just tried it and it ended up posting a new thread (which I deleted).


Is there, perhaps, some piece of code that someone thinks might be more helpful in trying to root-cause this issue? Please let me know if that's the case.

Thanks.


I can't spot anything obvious, but that doesn't mean it isn't something obvious; it usually is :-)

You can set the vertex color to position.z and see whether the values are indeed different. That might help track down whether there is an error in the matrices or something in the depth testing.


If it's truly obvious, then it must've bitten my face off a thousand times and I've not yet found what it could be :(

I've already tried setting the color to the gl_FragCoord.z value in the fragment shader, which, according to the documentation, holds the depth value of the fragment currently being processed (not the depth value currently in the depth buffer). If I change the fragment shader's color output line to either of these alternatives:

    color = vec4(gl_FragCoord.x, gl_FragCoord.y, gl_FragCoord.z, 1.0);
    // or
    color = vec4(gl_FragCoord.z, gl_FragCoord.z, gl_FragCoord.z, 1.0);

...then everything shows up white. I guess this specific result was somewhat expected, since changing glDepthFunc to GL_LESS seems to prevent anything from being written into the framebuffer (nothing gets rendered), while it "works" with GL_EQUAL and GL_LEQUAL.

I've been chasing this issue on and off (I work full time, so I don't necessarily get the chance every night) for some time now (2.5+ weeks), and I usually spend some time on a problem before asking for help on the forum. I've done additional reading on depth buffering and depth testing; the source that goes into the most detail is this one [1], yet nothing there has helped so far. (My other reading resources have been offline, i.e. books.) Even the 8th edition of the OpenGL "Red Book" barely skims the topic and doesn't seem to say anything I don't already understand. I guess that's what has made this issue so hard to root-cause: it seems so simple and straightforward that not even the reference books spend much time on it.

I'd at least be interested to see whether others get the same result I do. (I'd like to confirm that the issue is actually in my code and not something else...) I've attached the code here [2]. In the current implementation, the objects are placed at known, stationary locations, so we know what the end result should look like. If anyone could take a few minutes to try this, I'd appreciate it.
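The symptoms above (white gl_FragCoord.z output, nothing drawn with GL_LESS, last-drawn-wins with GL_EQUAL/GL_LEQUAL) are consistent with every fragment arriving at a depth of exactly 1.0, the same value the depth buffer is cleared to. Below is a minimal single-pixel simulation of the depth test under that assumption. DepthTestSim and its method names are hypothetical, and it models only the compare-and-write step (with depth writes enabled), not the real pipeline.

```java
import java.util.function.BiPredicate;

/** Single-pixel depth test simulation (hypothetical helper, not part of JOGL). */
final class DepthTestSim {
    // depthFunc(incoming, stored) mirrors glDepthFunc: the fragment passes when it returns true.
    static final BiPredicate<Float, Float> LESS = (incoming, stored) -> incoming < stored;
    static final BiPredicate<Float, Float> LEQUAL = (incoming, stored) -> incoming <= stored;

    // Returns the index of the object whose color ends up in the pixel, or -1 if the clear color remains.
    static int visibleObject(float[] fragmentDepths, float clearDepth, BiPredicate<Float, Float> depthFunc) {
        float stored = clearDepth;
        int winner = -1;
        for (int i = 0; i < fragmentDepths.length; i++) {
            if (depthFunc.test(fragmentDepths[i], stored)) {
                stored = fragmentDepths[i]; // depth write, as with glDepthMask(true)
                winner = i;
            }
        }
        return winner;
    }

    public static void main(String[] args) {
        // Three objects covering the same pixel, drawn in this order, all at depth 1.0.
        float[] allAtFarPlane = {1.0f, 1.0f, 1.0f};
        System.out.println("GL_LESS:   " + visibleObject(allAtFarPlane, 1.0f, LESS));
        System.out.println("GL_LEQUAL: " + visibleObject(allAtFarPlane, 1.0f, LEQUAL));
        // The same objects with distinct depths: the nearest one (index 0) should win.
        float[] distinct = {0.4f, 0.7f, 0.9f};
        System.out.println("GL_LESS, distinct depths: " + visibleObject(distinct, 1.0f, LESS));
    }
}
```

With all fragments at 1.0, GL_LESS leaves the clear color (-1), while GL_LEQUAL keeps the last object drawn (2), which is exactly the "draw order wins" behavior described above. With distinct depths, GL_LESS keeps the nearest object (0).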
For the project, I decided to use a graphics library posted on the CSUS web site [3], which provides the Point, Vector, and Matrix-related classes. (It is needed to run the project.) Those classes have been tested and are very unlikely to be related. Thanks again in advance to anyone willing/able to help out.

[1] http://www.arcsynthesis.org/gltut/Positioning/Tut05%20Overlap%20and%20Depth%20Buffering.html
[2] test-proj.gz
[3] http://athena.ecs.csus.edu/~gordonvs/155/graphicslib3D.zip


Try the following. The darker triangle should always be in front (darker = less depth value). You can try turning off depth testing and see the difference. At least that is what happens on my MacBook with AMD graphics.

    /*
    DO WHAT THE FUCK YOU WANT TO PUBLIC LICENSE
    Version 2, December 2004

    Copyright (C) 2014 Johan Maasing

    Everyone is permitted to copy and distribute verbatim or modified
    copies of this license document, and changing it is allowed as long
    as the name is changed.

    DO WHAT THE FUCK YOU WANT TO PUBLIC LICENSE
    TERMS AND CONDITIONS FOR COPYING, DISTRIBUTION AND MODIFICATION

    0. You just DO WHAT THE FUCK YOU WANT TO.

    http://en.wikipedia.org/wiki/WTFPL
    */

    package nu.zoom.corridors.client;

    import com.jogamp.common.nio.Buffers;
    import com.jogamp.newt.event.WindowAdapter;
    import com.jogamp.newt.event.WindowEvent;
    import com.jogamp.newt.opengl.GLWindow;
    import com.jogamp.opengl.util.Animator;
    import com.jogamp.opengl.util.glsl.ShaderCode;
    import com.jogamp.opengl.util.glsl.ShaderProgram;
    import java.io.ByteArrayOutputStream;
    import java.io.IOException;
    import java.nio.FloatBuffer;
    import javax.media.nativewindow.WindowClosingProtocol;
    import javax.media.opengl.DebugGL4;
    import javax.media.opengl.GL;
    import javax.media.opengl.GL4;
    import javax.media.opengl.GLAutoDrawable;
    import javax.media.opengl.GLCapabilities;
    import javax.media.opengl.GLEventListener;
    import javax.media.opengl.GLException;
    import javax.media.opengl.GLProfile;
    import javax.media.opengl.TraceGL4;

    /**
     *
     * @author Johan Maasing <johan@zoom.nu>
     */
    public class TriangleSample {

        // http://www.opengl.org/wiki/Vertex_Specification
        final int[] vas = new int[1];
        final int[] vbos = new int[1];
        private int shaderPosition;
        private int flatColorShaderProgram = 0;
        private Animator animator;
        private GLWindow glWindow;
        private boolean windowDestroyed = false;

        private static final float[] two_triangles = new float[]{
            -1.0f, -1.0f, 0.1f,
             1.0f,  1.0f, 0.1f,
             0.0f,  1.0f, 0.1f,
             1.0f, -1.0f, 0.7f,
             0.0f,  1.0f, 0.7f,
            -1.0f,  1.0f, 0.7f
        };

        private final String vertShader
                = "#version 410 core\n"
                + "in vec3 position;\n"
                + "out vec2 uv ;\n"
                + "void main(void)\n"
                + "{"
                + " uv = (position.xy + 1.0f)/2.0f;"
                + " gl_Position = vec4(position, 1.0f) ; \n"
                + "}";

        private final String flatColorfragShader
                = "#version 410 core\n"
                + "in vec2 uv;\n"
                + "layout(location = 0) out vec4 fragColor ;\n"
                + "void main(void)\n"
                + "{"
                + " fragColor = vec4(gl_FragCoord.z,gl_FragCoord.z,gl_FragCoord.z, 1.0) ; \n"
                + "}";

        public static void main(String[] args) throws Exception {
            TriangleSample app = new TriangleSample();
            app.run();
        }

        private void stop(String msg) {
            if (this.animator != null) {
                this.animator.stop();
            }
            if (this.glWindow != null) {
                this.windowDestroyed = true;
                this.glWindow.destroy();
            }
            System.out.println(msg);
        }

        private void run() throws Exception {
            final GLCapabilities caps = new GLCapabilities(GLProfile.get(GLProfile.GL4));
            caps.setBackgroundOpaque(true);
            caps.setDoubleBuffered(true);
            caps.setDepthBits(8);
            caps.setSampleBuffers(false);
            this.glWindow = GLWindow.create(caps);
            this.glWindow.setTitle("FBO Test");
            this.glWindow.setSize(640, 480);
            this.glWindow.setUndecorated(false);
            this.glWindow.setPointerVisible(true);
            this.glWindow.setVisible(true);
            this.glWindow.setFullscreen(false);
            this.glWindow.setDefaultCloseOperation(WindowClosingProtocol.WindowClosingMode.DISPOSE_ON_CLOSE);
            this.glWindow.addWindowListener(new WindowAdapter() {
                @Override
                public void windowDestroyNotify(WindowEvent we) {
                    if (!windowDestroyed) {
                        stop("Window destroyed");
                    }
                }
            });
            this.glWindow.addGLEventListener(new GLEventListener() {
                @Override
                public void reshape(GLAutoDrawable drawable, int x, int y, int width, int height) {
                    GL gl = drawable.getGL();
                    gl.glViewport(x, y, width, height);
                }

                @Override
                public void init(GLAutoDrawable drawable) {
                    GL gl = drawable.getGL();
                    if (gl.isGL4core()) {
                        drawable.setGL(new TraceGL4(new DebugGL4(gl.getGL4()), System.out));
                        GL4 gL4 = drawable.getGL().getGL4();
                        try {
                            TriangleSample.this.init(gL4);
                        } catch (IOException ex) {
                            stop(ex.getLocalizedMessage());
                        }
                    } else {
                        stop("Not a GL4 core context");
                    }
                }

                @Override
                public void dispose(GLAutoDrawable drawable) {
                }

                @Override
                public void display(GLAutoDrawable drawable) {
                    GL4 gL4 = drawable.getGL().getGL4();
                    TriangleSample.this.display(gL4);
                }
            });
            this.animator = new Animator(glWindow);
            this.animator.start();
        }

        private void init(GL4 gl) throws IOException {
            gl.glClearColor(1.0f, 0.0f, 1.0f, 1.0f);
            gl.glClearDepthf(1.0f);
            gl.glEnable(GL4.GL_CULL_FACE);
            gl.glCullFace(GL4.GL_BACK);
            gl.glFrontFace(GL4.GL_CCW);
            gl.glEnable(GL4.GL_DEPTH_TEST);
            gl.glDepthFunc(GL4.GL_LEQUAL);
            // Compile and link the shader then query the shader for the location of the vertex input variable
            final ShaderCode vertexShader = compileShader(gl, vertShader, GL4.GL_VERTEX_SHADER);
            final ShaderCode flatColorFragmentShader = compileShader(gl, flatColorfragShader, GL4.GL_FRAGMENT_SHADER);
            this.flatColorShaderProgram = linkShader(gl, vertexShader, flatColorFragmentShader);
            this.shaderPosition = gl.glGetAttribLocation(this.flatColorShaderProgram, "position");
            // Create the mesh
            createBuffer(gl, shaderPosition, two_triangles, 3);
        }

        public ShaderCode compileShader(final GL4 gl4, final String source, final int shaderType) throws IOException {
            final String[][] sources = new String[1][1];
            sources[0] = new String[]{source};
            ShaderCode shaderCode = new ShaderCode(shaderType, sources.length, sources);
            final ByteArrayOutputStream baos = new ByteArrayOutputStream();
            final boolean compiled = shaderCode.compile(gl4, System.err);
            if (!compiled) {
                System.err.println("Unable to compile " + source);
                System.exit(1);
            }
            return shaderCode;
        }

        private int linkShader(GL4 gl, final ShaderCode vertexShader, final ShaderCode textureFragmentShader) throws GLException {
            ShaderProgram program = new ShaderProgram();
            program.init(gl);
            program.add(vertexShader);
            program.add(textureFragmentShader);
            program.link(gl, System.out);
            final boolean validateProgram = program.validateProgram(gl, System.out);
            if (!validateProgram) {
                System.err.println("Unable to link shader");
                System.exit(1);
            }
            return program.program();
        }

        private void display(GL4 gl) {
            // Clear the default framebuffer
            gl.glBindFramebuffer(GL4.GL_DRAW_FRAMEBUFFER, 0);
            gl.glClear(GL4.GL_COLOR_BUFFER_BIT | GL4.GL_DEPTH_BUFFER_BIT);
            gl.glUseProgram(this.flatColorShaderProgram);
            gl.glBindVertexArray(this.vas[0]);
            gl.glEnableVertexAttribArray(this.shaderPosition);
            gl.glDrawArrays(GL4.GL_TRIANGLES, 0, 6);
        }

        public void createBuffer(final GL4 gl, final int shaderAttribute, float[] values, final int valuesPerVertex) {
            gl.glGenVertexArrays(this.vas.length, this.vas, 0);
            gl.glBindVertexArray(this.vas[0]);
            gl.glGenBuffers(this.vbos.length, this.vbos, 0);
            gl.glBindBuffer(GL4.GL_ARRAY_BUFFER, this.vbos[0]);
            FloatBuffer fbVertices = Buffers.newDirectFloatBuffer(values);
            final int bufferSizeInBytes = values.length * Buffers.SIZEOF_FLOAT;
            gl.glBufferData(GL4.GL_ARRAY_BUFFER, bufferSizeInBytes, fbVertices, GL4.GL_STATIC_DRAW);
            gl.glVertexAttribPointer(shaderAttribute, valuesPerVertex, GL4.GL_FLOAT, false, 0, 0);
        }
    }


Hi,

I apologize for the delayed response; it was a busy week. I had a chance to run the code and, as expected, it displayed the darker triangle in front of the lighter one. Disabling depth testing also produces the expected result: the last object drawn is always in front. (My project has depth testing enabled, uses the same depth function, and clears the depth buffer on every display, so we seem to be good there.)

I modified the sample code you provided in a few ways and noticed some things that I'll mention briefly. The modifications boil down to making the sample more similar to the project I'm working on. They include:

1. Splitting the triangle vertex position arrays into 2 separate VBOs;
2. Updating the fragment depth values to make one triangle switch between being in front and behind.

The full diff for the sample is here: triangle-sample.diff

One thing I noticed is that I had to add an offset attribute to the vertex shader of the sample code so that I could use it to update the Z component of the position vector. For this sample code, that is not unexpected, because the triangles' positions are never transformed, i.e. the triangles do not move around within the coordinate space. In my project, however, the objects do move around in 3D space.

I still don't expect that this is what I really need to do (i.e. add an offset attribute to my vertex shader), because I'm already using a vertex shader uniform (model_matrix) to store the transformations for the object I wish to render. Am I wrong to think that this should be enough? The model_matrix uniform already holds the translation/rotation/scale transformations for the object, so why would I need to add something else? That's why I think the root cause might still be elsewhere... but I'm clearly still missing something here, so help is still appreciated. Thanks in advance.
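On the model_matrix question: a translation stored in the model matrix does change the fragment depth once it is multiplied by a perspective projection, so an extra offset attribute should not be needed. The sketch below mirrors gl_Position = proj_matrix * view_matrix * model_matrix * position on the CPU with an identity view matrix. It assumes a gluPerspective-style projection and OpenGL's column-major layout; the class and method names are hypothetical and are not the project's MathUtil API.

```java
/** CPU-side mirror of proj * model * position, used to inspect depth values (hypothetical helper). */
final class ModelDepthDemo {
    // Column-major 4x4 (OpenGL convention): element (row r, col c) is m[c * 4 + r].
    static float[] perspective(float fovyDeg, float aspect, float zNear, float zFar) {
        float f = (float) (1.0 / Math.tan(Math.toRadians(fovyDeg) / 2.0));
        float[] m = new float[16];
        m[0] = f / aspect;
        m[5] = f;
        m[10] = (zFar + zNear) / (zNear - zFar);
        m[11] = -1.0f;
        m[14] = 2.0f * zFar * zNear / (zNear - zFar);
        return m;
    }

    static float[] translation(float x, float y, float z) {
        float[] m = new float[16];
        m[0] = m[5] = m[10] = m[15] = 1.0f;
        m[12] = x;
        m[13] = y;
        m[14] = z;
        return m;
    }

    // Returns a * b for column-major matrices.
    static float[] mul(float[] a, float[] b) {
        float[] r = new float[16];
        for (int col = 0; col < 4; col++) {
            for (int row = 0; row < 4; row++) {
                float sum = 0.0f;
                for (int k = 0; k < 4; k++) {
                    sum += a[k * 4 + row] * b[col * 4 + k];
                }
                r[col * 4 + row] = sum;
            }
        }
        return r;
    }

    // Window-space depth (default glDepthRange of 0..1) of a model-space point (x, y, z, 1).
    static float windowDepth(float[] mvp, float x, float y, float z) {
        float zClip = mvp[2] * x + mvp[6] * y + mvp[10] * z + mvp[14];
        float wClip = mvp[3] * x + mvp[7] * y + mvp[11] * z + mvp[15];
        return 0.5f * (zClip / wClip) + 0.5f;
    }

    public static void main(String[] args) {
        float[] proj = perspective(60.0f, 4.0f / 3.0f, 0.1f, 100.0f);
        for (float dz : new float[] {-2.0f, -5.0f}) {
            float[] mvp = mul(proj, translation(0.0f, 0.0f, dz)); // view matrix taken as identity
            System.out.printf("model translated to z = %.1f -> window depth %.5f%n", dz, windowDepth(mvp, 0.0f, 0.0f, 0.0f));
        }
    }
}
```

The object translated to z = -2 should report a smaller window depth (about 0.951) than the one at z = -5 (about 0.981), so with a valid projection the model matrix alone is enough to order objects in depth.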


So there is no issue with depth testing on your video-card - that's good at least :-)

My next guess would be that your math is wrong somewhere. You are right that the shaders in this example skip the transformation you usually do by passing a model-view-projection matrix as a uniform to the shader. What this example shows is that the depth value should by default be between 0.0 and 1.0, and that you can color the triangles using gl_FragCoord.z to see the depth value as a color. If your original code results in "all white" triangles, your depth value is always >= 1.0, which is a problem.

The math appears to be OK, though I'm still keeping an eye out just in case, and I certainly welcome more specific feedback. (I may have been looking at this long enough to miss it, just like missing typos in a long essay you've re-read many times.) I mentioned before that I had already tried that, and that the depth values all seemed to be the same (yes, it produces white objects). I also mentioned the difference in behavior between GL_LESS and GL_EQUAL.

From what I've read, my understanding is that the [0.0, 1.0] depth range is actually in normalized device coordinate (NDC) space, i.e. after the division by w, etc., and that these transformations in the pipeline already make sure the depth values are within the [0.0f, 1.0f] range. (Feel free to correct me if I'm wrong.) Based on the gl_FragCoord.z output and the GL_EQUAL/GL_LESS behavior, all fragments seem to have the same depth value of 1.0f. That would explain why the objects get rendered with GL_EQUAL but not with GL_LESS while using a depth clear value of 1.0f: no fragment has a depth value LESS than 1.0f, so every fragment fails the depth test and is discarded.

I looked at the math in the vertex shader (project2/shader.vert) and at the construction of the perspective view/projection matrices (MathUtil) again, but they seem OK. (The MathUtil code is from reference material.) One part of particular interest/concern is how the VBOs are being used. I expected the current translation/position to be what affects the fragment depth values, and I can see the position vectors updating as expected when I print the values from the Java code before sending them to the shader via the uniform. But I don't think I've observed the expected effect on the fragment depth values, and this is where I feel I've misunderstood something important. Any ideas?
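For reference on the NDC remark above: in OpenGL, NDC z spans [-1, 1], and it is the depth-range transform (glDepthRange, default 0 to 1) that maps it into the [0, 1] window-space depth reported by gl_FragCoord.z. A minimal sketch of that mapping follows, using the z values from jmaasing's sample (which writes gl_Position = vec4(position, 1.0), so w = 1 and clip, NDC and vertex z coincide). The class name is hypothetical.

```java
/** Sketch of OpenGL's perspective divide and depth-range transform (hypothetical helper). */
final class DepthRangeDemo {
    // Perspective divide: clip-space z becomes NDC z in [-1, 1] for points inside the clip volume.
    static double ndcZ(double zClip, double wClip) {
        return zClip / wClip;
    }

    // glDepthRange(rangeNear, rangeFar) maps NDC z in [-1, 1] linearly onto [rangeNear, rangeFar].
    static double windowDepth(double zNdc, double rangeNear, double rangeFar) {
        return (rangeFar - rangeNear) / 2.0 * zNdc + (rangeFar + rangeNear) / 2.0;
    }

    public static void main(String[] args) {
        // Triangle at z = 0.1: window depth about 0.55, the darker triangle in front.
        System.out.println(windowDepth(ndcZ(0.1, 1.0), 0.0, 1.0));
        // Triangle at z = 0.7: window depth about 0.85, the lighter triangle behind.
        System.out.println(windowDepth(ndcZ(0.7, 1.0), 0.0, 1.0));
        // Any point with z_clip == w_clip lands on window depth 1.0, the cleared value, and shows as white.
        System.out.println(windowDepth(ndcZ(3.0, 3.0), 0.0, 1.0));
    }
}
```

So a white gl_FragCoord.z means the vertex shader is producing z_clip equal to (or above) w_clip, i.e. NDC z of 1.0, before the depth test ever runs.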


Hi all,

I was able to find the root cause of the depth-testing problem I was having. It was the near clip plane value, which had at some point been (re)set to 0.0. A near clip plane value of 0.0 causes OpenGL to happily behave as if depth testing were not enabled, and OpenGL does not raise errors when the clip plane values are outside of their expected range. I had read about this several times, and I remember checking these values specifically a while back. Perhaps I had made the change in a different branch and threw it away at some point, so it never occurred to me that the problem would still be there. Anyway, I still managed to keep the issue around somehow, but it's now fixed 😐

Regards,
-x

PS: The math was correct ;)
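Why a zNear of 0.0 looks like disabled depth testing can be checked by hand. The sketch below assumes the project's perspective matrix uses the standard gluPerspective layout (an assumption; MathUtils itself is not shown in the thread) and evaluates only the two rows that produce z_clip and w_clip. With zNear = 0 the z row reduces to z_clip = -z_eye, which equals w_clip, so every point ends up at NDC depth 1.0, the cleared depth value. The class name is hypothetical.

```java
/** NDC depth from a gluPerspective-style projection (assumed layout; hypothetical helper). */
final class NearPlaneDemo {
    // For an eye-space point at zEye (negative in front of the camera):
    //   z_clip = (f + n) / (n - f) * zEye + 2 f n / (n - f)
    //   w_clip = -zEye
    static float ndcDepth(float zEye, float zNear, float zFar) {
        float zClip = (zFar + zNear) / (zNear - zFar) * zEye + 2.0f * zFar * zNear / (zNear - zFar);
        float wClip = -zEye;
        return zClip / wClip;
    }

    public static void main(String[] args) {
        float[] eyeDepths = {-1.0f, -5.0f, -50.0f};
        for (float zNear : new float[] {0.0f, 0.01f}) {
            StringBuilder line = new StringBuilder("zNear = " + zNear + ":");
            for (float z : eyeDepths) {
                line.append(String.format(" %.6f", ndcDepth(z, zNear, 100.0f)));
            }
            System.out.println(line);
        }
    }
}
```

With zNear = 0.0 every entry is exactly 1.0, so GL_LESS rejects every fragment and GL_LEQUAL lets the last object drawn win; with zNear = 0.01 the values differ (slightly below 1.0 and increasing with distance), so the depth test can order the objects again.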

I guess it depends on your definition of correct if you divide by 0. Good to hear it's working now. Those things can be hard to track down; for the last few weeks I've been chasing a triangle that always has a vertex at 0,0,0, even though the model I load from file has no such triangle, and I just can't find out why it happens :)


Well, since I build my own view and projection matrices, that's what I had in mind when I wrote it :) I was trying to rule out math problems in how those matrices were being built, but they were fine. That said, the zNear value is not used by itself as a divisor when creating a perspective matrix, so there's no division by zero unless the zNear and zFar plane values are the same. What divide by zero did you have in mind?

Yes, it can be difficult to spot, and even more frustrating when you actually do. I was able to narrow mine down when I started removing some of the calculations in the vertex shader to see where it would 'go bad'. (At that point, I thought I had a math error somewhere and was trying to catch the bad matrix. That was not the case this time around, but it did lead me in the right direction.)

    gl_Position = proj_matrix * view_matrix * model_matrix * position;

When I removed the projection/view matrices from the calculation, depth testing suddenly worked correctly and the objects' gl_FragCoord.z colors were gray rather than white. I narrowed it down to the projection matrix, and that's when I found that, somehow, I still had a hard-coded 0 for the zNear clip plane. The fix was:

    projMatrix = MathUtils.createPerspectiveMatrix(60.0f, aspectRatio, 0.0f, 100.0f); // from
    projMatrix = MathUtils.createPerspectiveMatrix(60.0f, aspectRatio, 0.01f, 100.0f); // to

For me, the most frustrating thing overall is that OpenGL, instead of raising an appropriate error when it receives an invalid value (say, GL_INVALID_VALUE?? gasp!), merrily continues, behaving in whatever way is most difficult to debug.

-x
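Since the matrix goes to the shader as a plain uniform, OpenGL never sees zNear and cannot reject it, so the check has to live in the code that builds the matrix. A hypothetical guard is sketched below; createPerspectiveMatrix here is not the project's MathUtils method, and the gluPerspective-style layout is an assumption.

```java
/** Hypothetical guarded builder for a gluPerspective-style projection matrix (column-major). */
final class SafePerspective {
    static float[] createPerspectiveMatrix(float fovyDeg, float aspect, float zNear, float zFar) {
        // The negated comparisons also reject NaN inputs.
        if (!(zNear > 0.0f)) {
            throw new IllegalArgumentException("zNear must be > 0, got " + zNear);
        }
        if (!(zFar > zNear)) {
            throw new IllegalArgumentException("zFar must be > zNear, got zNear=" + zNear + ", zFar=" + zFar);
        }
        if (!(fovyDeg > 0.0f && fovyDeg < 180.0f) || !(aspect > 0.0f)) {
            throw new IllegalArgumentException("invalid fovy or aspect: " + fovyDeg + ", " + aspect);
        }
        float f = (float) (1.0 / Math.tan(Math.toRadians(fovyDeg) / 2.0));
        // Column-major: element (row, col) is m[col * 4 + row], so it can be uploaded with transpose = false.
        float[] m = new float[16];
        m[0] = f / aspect;
        m[5] = f;
        m[10] = (zFar + zNear) / (zNear - zFar);
        m[11] = -1.0f;
        m[14] = 2.0f * zFar * zNear / (zNear - zFar);
        return m;
    }

    public static void main(String[] args) {
        try {
            createPerspectiveMatrix(60.0f, 4.0f / 3.0f, 0.0f, 100.0f); // the original, buggy call
        } catch (IllegalArgumentException e) {
            System.out.println("Rejected: " + e.getMessage());
        }
        float[] ok = createPerspectiveMatrix(60.0f, 4.0f / 3.0f, 0.01f, 100.0f); // the fixed call
        System.out.println("Accepted, m[10] = " + ok[10]);
    }
}
```

Failing fast at matrix construction turns the silent "everything at depth 1.0" symptom into an immediate exception that points at the bad parameter.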

Just so you know, very small values for the near clip plane are usually avoided due to precision issues; this is a good explanation: http://www.sjbaker.org/steve/omniv/love_your_z_buffer.html

OpenGL often does raise an error (depending on the driver, of course) when you break the spec. Sometimes you must remember to ask OpenGL about the status of the previous operation, and the way I usually do that is with JOGL classes and constructs like this one:

    drawable.setGL(new TraceGL4(new DebugGL4(gl.getGL4()), System.out));

Although in this case I doubt it would have warned you about writing a very high depth value. As far as I know, there is nothing in the spec preventing you from writing strange values here, and that's why it becomes so hard to track down.
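To put numbers on the precision point, the page linked above gives the stored depth value as roughly (1 << N) * (a + b / z), with a = zFar / (zFar - zNear), b = zFar * zNear / (zNear - zFar), and z the distance from the eye. The sketch below reproduces that formula as recalled here (treat it as an approximation, not JOGL's FloatUtil implementation) and estimates the eye-space distance covered by one depth step from its derivative. The class and method names are hypothetical.

```java
/** Z-buffer precision estimate based on the a + b / z depth formula (hypothetical helper). */
final class ZBufferPrecision {
    // Approximate integer value stored for an object at eye distance z (zNear <= z <= zFar) in an N-bit buffer.
    static long zBufferValue(int bits, double z, double zNear, double zFar) {
        double a = zFar / (zFar - zNear);
        double b = zFar * zNear / (zNear - zFar);
        return (long) ((1L << bits) * (a + b / z));
    }

    // Approximate eye-space distance between two adjacent depth values at distance z:
    // d(a + b / z)/dz = zFar * zNear / ((zFar - zNear) * z^2), inverted and scaled by 2^N.
    static double resolutionAt(int bits, double z, double zNear, double zFar) {
        return z * z * (zFar - zNear) / (zFar * zNear * (1L << bits));
    }

    public static void main(String[] args) {
        for (double zNear : new double[] {0.01, 1.0}) {
            System.out.printf("zNear = %.2f: one depth step at z = 50 covers about %.6f units%n",
                    zNear, resolutionAt(24, 50.0, zNear, 100.0));
        }
    }
}
```

With a 24-bit buffer and zFar = 100, moving zNear from 1.0 down to 0.01 makes the depth step at z = 50 roughly a hundred times coarser, because the step size scales with (zFar - zNear) / (zFar * zNear).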

Thanks for the link. It was a good read and I'll keep it around. I was already using those, as shown in the project I had attached :) The glGetError checks simply showed everything as "ok".

The documentation for gluPerspective (a GLU function, which I'm not using myself) states that the zNear/zFar values must be positive. The fact that it does not specify that a non-positive number (e.g. zero or negative) should raise an error (e.g. GL_INVALID_VALUE) seems to suggest that it's undefined behavior, and the driver may behave in whatever way it sees fit (read: likely unpredictably).

While writing this, I think I realized why OpenGL never raised any errors. OpenGL might have raised an error for a non-positive zNear value (e.g. GL_INVALID_VALUE) if a function that specifically takes those inputs had been used. But no such function was used; the perspective matrix was built in my own code and then sent to the pipeline as a uniform, so I don't think it could have raised any errors anyway.

-x

PS: Hindsight is 20/20

Exactly. OpenGL has no near clip-plane setting as such (the fixed-function pipeline had one, but modern GL does not), so the first it saw of the vertex was your shader outputting the position, and there is nothing preventing odd values there. That's why I said it must be a problem with the math; I should have been clearer about what I meant. Hindsight and all that :)


Administrator


In reply to this post by xghost

On 10/16/2014 08:20 AM, xghost [via jogamp] wrote:

> jmaasing wrote:
> Just so you know, setting small values on the near clip plane is usually
> avoided due to precision issues, this is a good explanation:
> http://www.sjbaker.org/steve/omniv/love_your_z_buffer.html

I also added a reference to this nice doc in our FloatUtil class:

<https://jogamp.org/deployment/jogamp-next/javadoc/jogl/javadoc/com/jogamp/opengl/math/FloatUtil.html#getZBufferEpsilon%28int,%20float,%20float%29>
<https://jogamp.org/deployment/jogamp-next/javadoc/jogl/javadoc/com/jogamp/opengl/math/FloatUtil.html#getZBufferValue%28int,%20float,%20float,%20float%29>

:)
